YeOldeStonecat


Falls to defaults


So what is the situation when there is no retention policy specified .. at all?

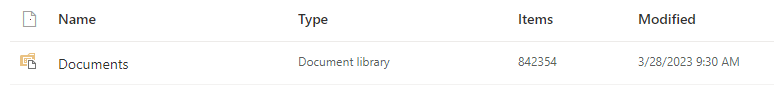


I have an Archive team. I move all SharePoint stuff that I need out of there to it, and it's configured on someone's desktop somewhere to sync all to it. I have to verify that machine has it all, move everything into other storage of some kind, then delete it from the team, and go online and empty the SharePoint recycle bin for that team.

This makes perfect sense.....as long as you have the ability to make the call for this step:

move all SharePoint stuff that I need out of there to it (emphasis mine)

When trying to manage this for clients, only they can really know "the stuff they need out of there", i.e. what data can be archived. A big issue here is that there aren't many built-in tools to help identify this data. You contact the client and tell them they are running out of space, and they ask you to "make me a list of all files that haven't been accessed in 5 years". Maybe you can do that in PowerShell, I don't know. Then they say, "OK, go ahead and archive that." Well, it's not that simple. Presumably they don't just want random old files put in a single archive folder on some storage service. You would need to maintain a similar folder tree on that service so they could actually find that data if they needed it some day.
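For what it's worth, here is a rough sketch of how that "list of old files" could be produced. It is Python rather than PowerShell, and it assumes the library is fully synced to a local folder. It also uses the file's last-modified time as a stand-in for "last accessed", since local access times are often unreliable and may not match what SharePoint itself reports. The function name and the 5-year default are just illustrative.

```python
import os
import time
from pathlib import Path

SECONDS_PER_YEAR = 365 * 24 * 60 * 60


def find_stale_files(root, years=5, now=None):
    """Return files under `root` whose last-modified time is older than `years`.

    Assumption: `root` is a locally synced copy of the library, and the
    modification time is an acceptable proxy for "not used recently".
    """
    now = time.time() if now is None else now
    cutoff = now - years * SECONDS_PER_YEAR
    stale = []
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            path = Path(dirpath) / name
            try:
                if path.stat().st_mtime < cutoff:
                    stale.append(path)
            except OSError:
                # Skip files that vanish or cannot be read during the scan.
                continue
    return sorted(stale)
```

Calling `find_stale_files(r"C:\Sync\ClientLibrary")` (a made-up path) returns a sorted list of `Path` objects that could be written out to a CSV for the client to review before anything gets moved.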

THAT means you would need to create "X:\Clients\R\RDC001\Projects\2016" folders there to accept the data. I know I wouldn't want that job unless it could be automated, but can it? If you can't automate it, then this is a project that will never be done consistently.
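On the "can it be automated" question, recreating the folder tree at the destination is the easy part to script. Below is a minimal sketch in Python, assuming both the source library and the archive storage are reachable as ordinary file-system paths (a synced folder and a mapped drive, say). The function name and the dry-run default are my own choices, not anything built into Microsoft 365.

```python
import shutil
from pathlib import Path


def archive_preserving_tree(files, source_root, archive_root, dry_run=True):
    """Move `files` to `archive_root`, keeping their path relative to `source_root`.

    Returns the list of (source, destination) pairs. With dry_run=True nothing
    is touched, so the plan can be reviewed with the client first.
    """
    source_root = Path(source_root)
    archive_root = Path(archive_root)
    planned = []
    for f in files:
        src = Path(f)
        # Raises ValueError if a file is not under source_root.
        rel = src.relative_to(source_root)
        dest = archive_root / rel
        planned.append((src, dest))
        if not dry_run:
            # Create e.g. Clients\R\RDC001\Projects\2016 on the archive side.
            dest.parent.mkdir(parents=True, exist_ok=True)
            shutil.move(str(src), str(dest))
    return planned
```

Run it once with `dry_run=True` to get the list of moves, then again with `dry_run=False` once the client signs off. It does not handle name collisions at the destination or emptying the SharePoint recycle bin afterwards; those would still need separate handling.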

Don't forget also that it's not just file sizes that are a problem. I can imagine many folks would run into the limitation on NUMBER of files before they hit the space limit.

This entire issue is largely unknown to the average client, and it is difficult for us to get them to assign enough value to it to do anything before limits are hit and there are problems.

It looks like we are well within the 30 million items limitation?
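If you want a quick sanity check against that 30 million figure, a locally synced copy can be counted with a few lines of Python. This is only a rough estimate: it assumes files and folders each count as one item, and it ignores anything that exists only in SharePoint (versions, recycle bin contents, unsynced libraries). The function names are illustrative.

```python
import os

ITEM_LIMIT = 30_000_000  # the item figure quoted in this thread


def count_items(root):
    """Return (files, folders) found under `root`, not counting `root` itself."""
    files = folders = 0
    for _dirpath, dirnames, filenames in os.walk(root):
        folders += len(dirnames)
        files += len(filenames)
    return files, folders


def limit_usage(root, limit=ITEM_LIMIT):
    """Return the fraction of `limit` used, assuming files and folders both count."""
    files, folders = count_items(root)
    return (files + folders) / limit
```

A result of `0.02` from `limit_usage(...)` would mean roughly 2% of the limit is in use by what is visible locally.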

if their practices were poor in how they handled storing data.

Digital hoarding eventually comes back to bite those that do it in the posterior.