Markverhyden

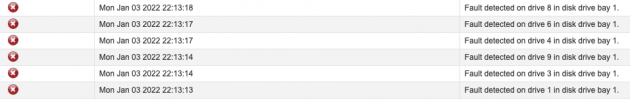

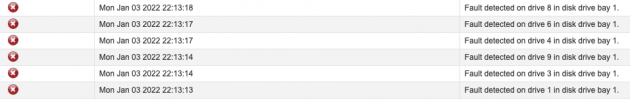

Noticed my email server (an R730XD) was offline this AM when I got up. Checked via web and it still wasn't working. So I went to the network room and found a bunch of drives offline, 6 out of 12. Of course this was more than a little panic-inducing. After some more investigation, all the offline drives turned out to be marked foreign. More digging turned up that, while unusual, one Dell rep said a single failing drive can in turn cause other drives to go offline due to bad writes. In this case, after reseating, all the drives came back but were still marked foreign. So I bit the bullet and rebooted, telling it to import all foreign drives. Luckily ESXi came back up, but the email server was corrupted, so I had to restore it from a backup. Fortunately no other VM was running, so they should be OK. Has anybody heard of such a thing? The same thing happened to me 2-3 years ago on a different server.